Methodology

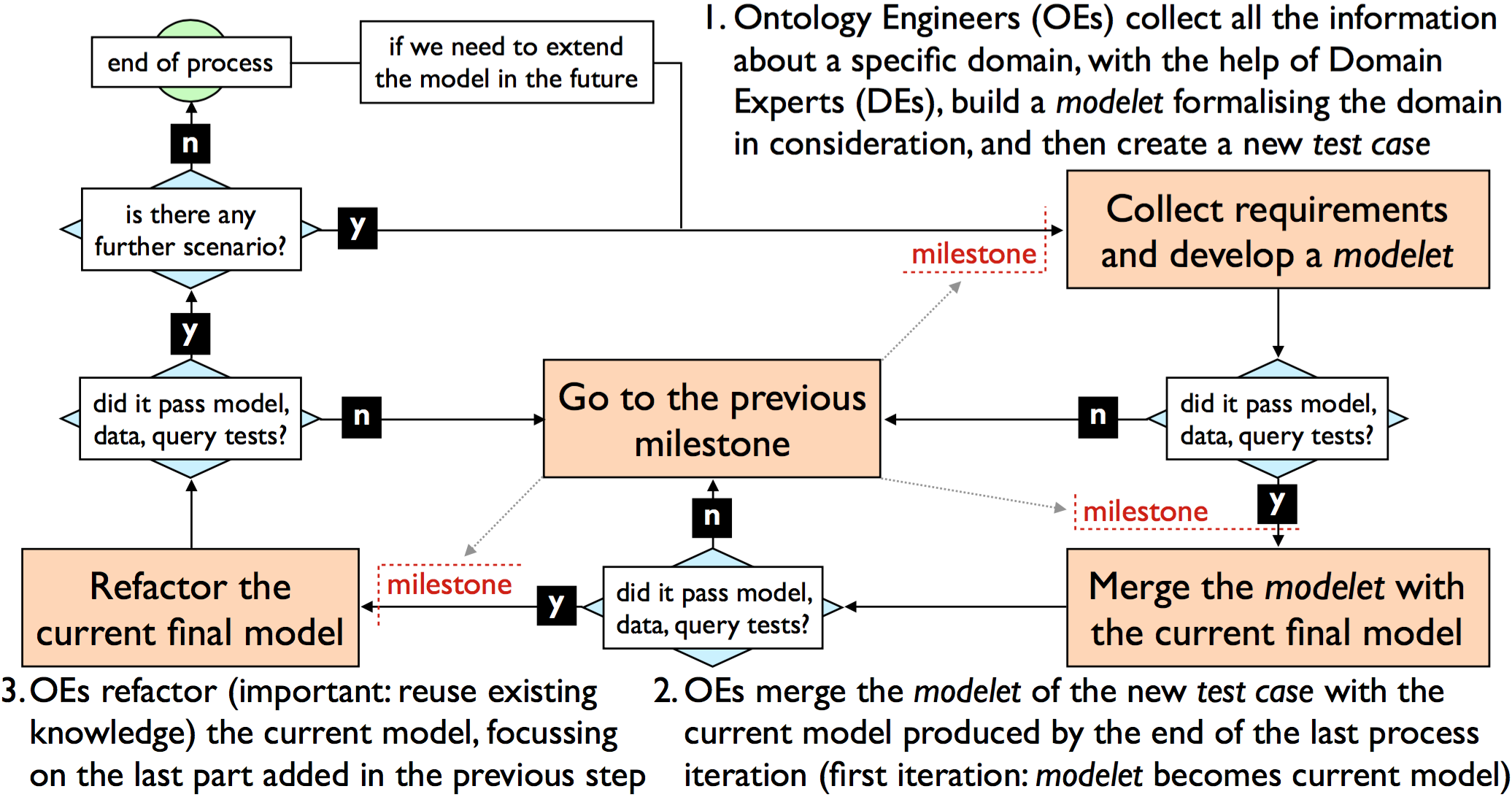

SAMOD is based on the following three iterative steps (briefly summarised in the figure above), each of which ends with the release of a snapshot of the current state of the process, called a milestone:

- OEs collect all the information about a specific domain, with the help of DEs, in order to build a modelet formalising the domain under consideration, following certain ontology development principles, and then create a new test case that includes the modelet. If everything works fine (i.e., all model, data and query tests are passed), release a milestone and proceed;

- OEs merge the modelet of the new test case with the current model produced by the end of the last process iteration, and consequently update all the test cases in BoT, specifying the new current model as TBox. If everything works fine (i.e., all model, data and query tests are passed according to their formal requirements only), release a milestone and proceed;

- OEs refactor the current model, focussing in particular on the last part added in the previous step, taking into account good practices for ontology development processes. If everything works fine (i.e., all model, data and query tests are passed), release a milestone. If there is another motivating scenario to be addressed, iterate the process; otherwise stop.
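The control flow of one iteration can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the names `Process`, `run_iteration` and the step labels are hypothetical, and each step is reduced to a callable that reports whether its model, data and query tests passed.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Sequence, Tuple

# All names here (Process, run_iteration, the step labels) are hypothetical;
# SAMOD itself does not prescribe any programming interface.
Step = Tuple[str, Callable[[], bool]]


@dataclass
class Process:
    """Keeps the ordered list of milestones released so far."""
    milestones: List[str] = field(default_factory=list)


def run_iteration(process: Process, n: int, steps: Sequence[Step]) -> bool:
    """Run the steps of iteration n in order.

    Each step callable returns True when its model, data and query tests pass.
    A milestone is released after every passing step; the first failing step
    stops the iteration, leaving the last released milestone as the fallback.
    """
    for label, step in steps:
        if not step():
            return False
        process.milestones.append(f"iteration-{n}/{label}")
    return True


process = Process()
passed = run_iteration(process, 1, [
    ("define-test-case", lambda: True),
    ("merge", lambda: True),
    ("refactor", lambda: True),
])
print(passed, process.milestones)
```

In practice each callable would wrap the actual work of the step and the corresponding test runs; the sketch only shows where milestones are released.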

The next sections elaborate on those steps, introducing a real example and considering a generic iteration i_n.

Define a new test case

OEs and DEs work together to write down a motivating scenario MSn, staying as close as possible to the language DEs commonly use for talking about the domain. An example of a motivating scenario is shown in the table below.

| Name | Vagueness of the TBox entities of an ontology |
|---|---|
| Description | Vagueness is a common human knowledge and language phenomenon, typically manifested by terms and concepts like High, Expert, Bad, Near etc. In an OWL ontology vagueness may appear in the definitions of classes, properties, datatypes and individuals. For these entities a more explicit description of the nature and characteristics of their vagueness/non-vagueness is required. Analysing and describing the nature of vagueness/non-vagueness in ontological entities is a subjective activity, since it is often a personal interpretation of someone (a person or, more generally, an agent). Vagueness can be described according to at least two complementary types referring to quantitative or qualitative connotations respectively. The quantitative aspect of vagueness concerns the (real or apparent) lack of precise boundaries defining an entity along one or more specific dimensions. The qualitative aspect of vagueness concerns the identification of such other discriminants of which boundaries are not quantifiable in any precise way. Either a vagueness description, which always specifies a type, or a non-vagueness description provides at least a justification (defined either as natural language text, an entity, a more complex logic formula, or any combination of them) that motivates a specific aspect of why an entity should be intended as vague/non-vague. Multiple justifications are possible for the same description. The annotation of an entity with information about its vagueness is a particular act of tagging done by someone (i.e., an agent) who associates a description of vagueness/non-vagueness (called the body of the annotation) to the entity in consideration (called the target of the annotation). |
| Example 1 | Silvio Peroni thinks that the class TallPerson is vague since there is no way to define a crisp height threshold that may separate tall from non-tall people. Panos Alexopoulos, on the other hand, considers someone as tall when his/her height is at least 190cm. Thus, for Panos, the class TallPerson is not vague. |
| Example 2 | In a company ontology, the class StrategicClient is considered vague. However, the company's R&D Director believes that for a client to be classified as strategic, the amount of its R&D budget should be the only factor to be considered. Thus according to him/her the vague class StrategicClient has quantitative vagueness and the dimension is the amount of R&D budget. On the other hand, the Operations Manager believes that a client is strategic when it has a long-term commitment to the company. In other words, the vague class StrategicClient has quantitative vagueness and the dimension is the duration of the contract. Finally, the company's CEO thinks that StrategicClient is vague from a qualitative point of view. In particular, although there are several criteria one may consider necessary for being strategic (e.g. a long-standing relation, high project budgets, etc.), it's not possible to determine which of these are sufficient. |

Given a motivating scenario, OEs and DEs should produce a set of informal competency questions CQn, each of them identified appropriately. An example of an informal competency question, formulated starting from the motivating scenario above, is shown in the table below.

| Identifier | 3 |
|---|---|
| Question | What are all the entities that are characterised by a specific vagueness type? |
| Outcome | The list of all the pairs of entity and vagueness type. |
| Example | StrategicClient, quantitative; StrategicClient, qualitative |
| Depends on | 1 |
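As an illustration, a competency question and its "Depends on" field can be captured in a small record, which makes it easy to list questions so that each one follows those it depends on. The sketch below is an assumption-laden example, not part of SAMOD: the class `CompetencyQuestion` and the function `review_order` are hypothetical names, and question 1 is represented by placeholder text because its content is not shown here.

```python
from dataclasses import dataclass
from graphlib import TopologicalSorter  # standard library, Python 3.9+
from typing import Iterable, List, Tuple


@dataclass(frozen=True)
class CompetencyQuestion:
    """One informal competency question (hypothetical record layout)."""
    identifier: int
    question: str
    outcome: str
    examples: Tuple[Tuple[str, str], ...] = ()
    depends_on: Tuple[int, ...] = ()


def review_order(questions: Iterable[CompetencyQuestion]) -> List[int]:
    """Return identifiers so that each question follows those it depends on."""
    graph = {q.identifier: set(q.depends_on) for q in questions}
    return list(TopologicalSorter(graph).static_order())


cq3 = CompetencyQuestion(
    identifier=3,
    question="What are all the entities that are characterised by a "
             "specific vagueness type?",
    outcome="The list of all the pairs of entity and vagueness type.",
    examples=(("StrategicClient", "quantitative"),
              ("StrategicClient", "qualitative")),
    depends_on=(1,),
)
# Question 1 is referenced by "Depends on" but its text is not given here,
# so placeholders are used instead of invented content.
cq1 = CompetencyQuestion(1, "<question 1>", "<outcome of question 1>")

order = review_order([cq3, cq1])
print(order)
```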

Now, having both a motivating scenario and a list of informal competency questions, OEs and DEs write down a glossary of terms GoTn. An example of a glossary of terms is shown in the table below.

| Term | Definition |
|---|---|
| annotation of vagueness/non-vagueness | The annotation of an ontological entity with information about its vagueness is a particular act of tagging done by someone (i.e., an agent) who associates a description of vagueness/non-vagueness (called the body of the annotation) to the entity in consideration (called the target of the annotation). |
| agent | The agent who tags an ontology entity with a vagueness/non-vagueness description. |
| description of non-vagueness | The descriptive characterisation of non-vagueness to associate to an ontological entity by means of an annotation. It provides at least one justification for considering the target ontological entity non-vague. This description is primarily meant to be used for entities that would typically be considered vague but which, for some reason, in the particular ontology are not. |
| description of vagueness | The descriptive characterisation of vagueness to associate to an ontological entity by means of an annotation. It specifies a vagueness type and provides at least one justification for considering the target ontological entity vague. |
| vagueness type | A particular kind of vagueness that characterises the entity. |
| quantitative vagueness | A vagueness type that concerns the (real or apparent) lack of precise boundaries defining an entity along one or more specific dimensions. |
| qualitative vagueness | A vagueness type that concerns the identification of such other discriminants of which boundaries are not quantifiable in any precise way. |
| justification for vagueness/non-vagueness description | A justification that explains one possible reason behind a vagueness/non-vagueness description. It is defined either as natural language text, an entity, a more complex logic formula, or any combination of them. |
| has natural language text | The natural language text defining the body of a justification. |
| has entity | The entity defining the body of a justification. |
| has logic formula | The logic formula defining the body of a justification. |

The remaining part of this step is led by OEs only, who are responsible for developing a modelet according to the motivating scenario, the informal competency questions and the glossary of terms.

In doing that work, they must adhere strictly to the following principles:

- Keep it small. Keeping the number of developed ontology entities small is essential when developing an ontology. In fact, by making small changes (and retesting frequently, as our framework prescribes), one always has a good idea of what change has caused an error in the model. Moreover, according to Miller, on average OEm cannot hold more than a small number of objects in working memory. Thus, OEm should define at most N classes, N individuals, N attributes (i.e., data properties) and N relations (i.e., object properties), where N is Miller's magic number, 7 ± 2.

- Use patterns. In thinking about the best way to model a particular aspect of the domain, OEm should take existing knowledge into consideration. In particular, we strongly encourage looking at documented patterns – the Semantic Web Best Practices and Deployment Working Group page and the Ontology Design Patterns portal are both valuable examples – and at widely-adopted Semantic Web vocabularies, such as FOAF for people, SIOC for social communities, and so on.

- Middle-out development. By defining the most relevant concepts first (the basic concepts) and only later adding the most abstract and most concrete ones, the middle-out approach allows one to avoid unnecessary effort during development, because detail arises only as necessary, by adding sub- and super-classes to the basic concepts. Moreover, this approach, if used properly, tends to produce much more stable ontologies, as stated in .

- Keep it simple. The modelet must be designed according to the information obtained previously (motivating scenario, informal competency questions, glossary of terms) as quickly as possible, spending the minimum effort and without adding any unnecessary semantic structure. In particular, do not think about inference at this stage; focus instead on describing the motivating scenario fully.

- Self-explanatory entities. The aim of each ontological entity must be understandable by humans simply by looking at its local name (i.e., the last part of the entity IRI). Therefore, no labels or comments need to be added at this stage, and entity IRIs must not be opaque. In particular, class local names have to be capitalised (e.g., Justification) and in camel-case notation if composed of more than one word (e.g., DescriptionOfVagueness). Property local names have to be non-capitalised and in camel-case notation if composed of more than one word; moreover, each property local name must start with a verb (e.g., wasAttributedTo) and, in the case of data properties, the verb has to be followed by the name of the object referred to (e.g., hasNaturalLanguageText). Individual local names must be non-capitalised (e.g., ceo) and dash-separated if composed of more than one word (e.g., quantitative-vagueness).
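Two of these principles, "keep it small" and "self-explanatory entities", lend themselves to a mechanical check. The following Python sketch is a minimal illustration under stated assumptions: the function `check_modelet` and the constant `MAX_PER_KIND` are hypothetical names, N is taken to be 9 (the upper bound of 7 ± 2), and the requirement that property names start with a verb is not checked because it cannot be verified with a pattern alone.

```python
import re
from typing import List, Sequence

# Assumption: N is read as the upper bound of Miller's 7 ± 2.
MAX_PER_KIND = 9

# Simplified local-name rules derived from the conventions described above.
NAME_RULES = {
    "class": re.compile(r"[A-Z][A-Za-z0-9]*"),              # e.g. DescriptionOfVagueness
    "property": re.compile(r"[a-z][A-Za-z0-9]*"),           # e.g. hasNaturalLanguageText
    "individual": re.compile(r"[a-z0-9]+(?:-[a-z0-9]+)*"),  # e.g. quantitative-vagueness
}


def check_modelet(classes: Sequence[str],
                  data_properties: Sequence[str],
                  object_properties: Sequence[str],
                  individuals: Sequence[str]) -> List[str]:
    """Return a list of human-readable problems; an empty list means no issue found."""
    groups = [
        ("class", "classes", classes),
        ("property", "data properties", data_properties),
        ("property", "object properties", object_properties),
        ("individual", "individuals", individuals),
    ]
    problems: List[str] = []
    for rule, label, names in groups:
        if len(names) > MAX_PER_KIND:
            problems.append(f"too many {label}: {len(names)} > {MAX_PER_KIND}")
        for name in names:
            if not NAME_RULES[rule].fullmatch(name):
                problems.append(f"{label}: '{name}' breaks the naming rule")
    return problems


report = check_modelet(
    classes=["Justification", "DescriptionOfVagueness"],
    data_properties=["hasNaturalLanguageText"],
    object_properties=["wasAttributedTo"],
    individuals=["ceo", "quantitative-vagueness"],
)
print(report)
```

A check of this kind only flags surface violations; whether a local name is actually self-explanatory remains a human judgement.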

The goal of OEm is to develop a modeletn, possibly starting from a graphical representation written in a suitable visual language, such as UML, the E/R model or Graffoo, so as to convert it automatically into OWL by means of appropriate tools, e.g., DiTTO.

Starting from the OWL version of modeletn, OEs proceed in four phases:

- run a model test on modeletn. If it succeeds, then

- create an exemplar dataset ABoxn that formalises all the examples introduced in the motivating scenario according to modeletn, and run a data test. If it succeeds, then

- write as many formal queries SQn as there are informal competency questions related to the motivating scenario, and run a query test. If it succeeds, then

- create a new test case Tn = (MSn, CQn, GoTn, modeletn, ABoxn, SQn) and add it to BoT.
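The four phases can be read as a gated pipeline in which each test runs only if the previous one passed. The sketch below is a simplification under stated assumptions: `SamodTestCase` and `define_test_case` are hypothetical names, the three tests are caller-supplied callables (for instance, wrappers around a reasoner or a query engine), and the candidate test case is assumed to be fully assembled up front rather than built phase by phase.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class SamodTestCase:
    """Tn = (MSn, CQn, GoTn, modeletn, ABoxn, SQn); field types are assumptions."""
    motivating_scenario: str         # MSn
    competency_questions: List[str]  # CQn
    glossary: List[str]              # GoTn
    modelet: str                     # modeletn, e.g. an OWL serialisation
    abox: str                        # ABoxn
    formal_queries: List[str]        # SQn


Check = Callable[[SamodTestCase], bool]


def define_test_case(candidate: SamodTestCase,
                     model_test: Check,
                     data_test: Check,
                     query_test: Check,
                     bag: List[SamodTestCase]) -> Optional[SamodTestCase]:
    """Run model, data and query tests in order; add to the bag only if all pass."""
    for check in (model_test, data_test, query_test):
        if not check(candidate):
            return None  # later tests are not run after a failure
    bag.append(candidate)
    return candidate


bag_of_test_cases: List[SamodTestCase] = []
t_n = SamodTestCase(
    motivating_scenario="<MSn>",
    competency_questions=["<CQ 3>"],
    glossary=["vagueness type"],
    modelet="<modeletn in OWL>",
    abox="<ABoxn>",
    formal_queries=["<formal query for CQ 3>"],
)
added = define_test_case(t_n, lambda t: True, lambda t: True, lambda t: True,
                         bag_of_test_cases)
print(added is t_n, len(bag_of_test_cases))
```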

When running the model test, the data test and the query test, it is possible to use any appropriate available software to support the task, such as reasoners (Pellet, HermiT) and query engines (Jena, Sesame).

Any test failure that all the OEs consider a serious issue results in going back to the most recent milestone. It is worth mentioning that an exception should also be raised if OEs think that the motivating scenario MSn is too big to be covered by a single iteration of the process. In this case, it may be necessary to re-schedule the whole iteration, for example by splitting the motivating scenario into two new ones.

Merge the current model with the modelet

At this stage, OEs merge modeletn, included in the new test case Tn, with the current model, i.e., the version of the final model released at the end of the previous iteration (i.e., i_{n-1}). OEs have to proceed in four consecutive steps:

- define a new model TBoxn by merging the current model with modeletn. Namely, OEs must add all the axioms from the current model and modeletn to TBoxn and then collapse semantically-identical entities, e.g., those that have similar local names and that represent the same entity from a semantic point of view (e.g., Person and HumanBeing);

- update all the test cases in BoT, swapping the TBox of each test case with TBoxn and refactoring each ABox and SQ according to the new entity names if needed, so that they refer to the most recent model;

- run the model test, the data test and the query test on all the test cases in BoT, according to their formal requirements only;

- set TBoxn as the new current model.
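The first two steps above can be illustrated with a toy representation in which a model is a set of (subject, predicate, object) triples and collapsing entities is a renaming. This is a sketch under stated assumptions, not how SAMOD or any OWL tool implements merging: `rename` and `merge_step` are hypothetical names, the class `Agent` and the individual `silvio` are invented for the example, and formal queries are left out for brevity.

```python
from typing import Dict, Iterable, List, Set, Tuple

Triple = Tuple[str, str, str]


def rename(triples: Iterable[Triple], collapse: Dict[str, str]) -> Set[Triple]:
    """Replace every entity that has an entry in `collapse` with its target name."""
    return {
        (collapse.get(s, s), collapse.get(p, p), collapse.get(o, o))
        for s, p, o in triples
    }


def merge_step(current_model: Set[Triple],
               modelet: Set[Triple],
               collapse: Dict[str, str],
               aboxes: List[Set[Triple]]) -> Tuple[Set[Triple], List[Set[Triple]]]:
    """Build TBoxn from both models and rewrite each ABox to the new entity names."""
    tbox_n = rename(current_model | modelet, collapse)
    updated_aboxes = [rename(abox, collapse) for abox in aboxes]
    return tbox_n, updated_aboxes


# Hypothetical data: HumanBeing (current model) and Person (modelet) denote the same class.
current_model = {("HumanBeing", "rdfs:subClassOf", "Agent")}
modelet = {("Person", "rdfs:subClassOf", "Agent"),
           ("TallPerson", "rdfs:subClassOf", "Person")}
old_abox = {("silvio", "rdf:type", "HumanBeing")}

tbox_n, aboxes = merge_step(current_model, modelet,
                            {"HumanBeing": "Person"}, [old_abox])
print(sorted(tbox_n))
print(sorted(aboxes[0]))
```

After this rewriting, the model, data and query tests would still have to be re-run on every test case, as the third step requires.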

Any serious failure of any test, which means that something went wrong in updating the test cases in BoT, results in going back to a previous milestone. In this case, OEs have to consider the most recent milestone, if they think there was a mistake in a procedure of this step, or the one before it, if the failure is demonstrably caused by any of the components of the new test case Tn.

Refactor the current model

In the last step, OEs work to refactor the current model, shared among all the test cases in BoT, and, accordingly, each ABox and SQ of each test case, if needed. In doing that task, OEs must strictly follow the following principles:

- Reuse existing knowledge. Reusing concepts and relations defined in other models is encouraged and often labelled as a common good practice. The reuse can result either in including external entities in the current model as they are or in providing an alignment or a harmonisation with another model.

- Document it. Add annotations, i.e., labels (rdfs:label), comments (rdfs:comment) and provenance information (rdfs:isDefinedBy), to ontological entities, so as to provide natural language descriptions of them in at least one language (e.g., English). This is an important aspect to take into consideration, since there are several tools available, e.g., LODE, that are able to process an ontology in source format and produce human-readable HTML documentation of it, starting from the annotations it specifies.

- Take advantage of technologies. When possible, enrich the current model by using all the capabilities offered by the formal language in which it is developed (e.g., when using OWL 2 DL: keys, property characteristics such as transitivity and symmetry, property chains, inverse properties and the like) in order to infer automatically as much information as possible starting from a (possibly) small set of real data. In particular, it is important to avoid over-classification, for instance by specifying assertions that may be automatically inferred by a reasoner: e.g., in creating an inverse property of a property P, it is not necessary to define its domain and range explicitly, because they will be inferred from P itself.
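The inverse-property example in the last principle can be made concrete with a toy inference over triples: if Q is declared as the inverse of P, the domain of Q follows from the range of P and vice versa. The sketch below is a hand-written, single-pass illustration and not a reasoner; `infer_inverse_domain_range` is a hypothetical name, and the property `attributed` and the classes `Annotation` and `Agent` are assumptions made for the example.

```python
from typing import Iterable, Set, Tuple

Triple = Tuple[str, str, str]


def infer_inverse_domain_range(triples: Iterable[Triple]) -> Set[Triple]:
    """Return domain/range triples implied by owl:inverseOf declarations.

    Only triples not already declared are returned. The function makes a
    single pass over the declared triples and handles nothing else.
    """
    declared = set(triples)
    inferred: Set[Triple] = set()
    for q, predicate, p in declared:
        if predicate != "owl:inverseOf":
            continue
        # owl:inverseOf is symmetric, so propagate in both directions.
        for target, source in ((q, p), (p, q)):
            for s, pr, o in declared:
                if s != source:
                    continue
                if pr == "rdfs:domain":
                    inferred.add((target, "rdfs:range", o))
                elif pr == "rdfs:range":
                    inferred.add((target, "rdfs:domain", o))
    return inferred - declared


# Hypothetical model: only wasAttributedTo carries explicit domain and range.
model = {
    ("wasAttributedTo", "rdfs:domain", "Annotation"),
    ("wasAttributedTo", "rdfs:range", "Agent"),
    ("attributed", "owl:inverseOf", "wasAttributedTo"),
}
implied = infer_inverse_domain_range(model)
print(sorted(implied))
```

In an actual workflow this inference is left to an OWL reasoner, which is why the domain and range of the inverse property need not be asserted by hand.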

Finally, once the refactoring is finished, OEs have to run the model test, the data test and the query test on all the test cases in BoT. This is a crucial task to perform, since it guarantees that the refactoring has not damaged any existing conceptualisation implemented in the current model.

Output of an iteration

Each iteration of SAMOD aims to produce a new test case that will be added to the bag of test cases (BoT). Each test case describes a particular aspect of the same model, i.e., the current model under consideration after one iteration of the methodology.

In addition to being an integral part of the methodology process, each test case represents complete documentation of a particular aspect of the domain described by the model, thanks to the natural language descriptions (the motivating scenario and the informal competency questions) it includes, as well as the formal implementation of exemplar data (the ABox) and possible ways of querying the data compliant with the model (the set of formal queries). All this additional information should help end users understand, with less effort, what the model is about and how they can use it to describe the particular domain it addresses.